There’s nothing cool or sexy about data backup, let’s be honest. But it’s probably more relevant and important today than ever before. At the same time, it’s also more achievable and reliable. In this series of articles, we’re going to look at the realities of backing up, what you can do to most effectively protect yourself from data loss and the various technologies that can be employed in that effort.

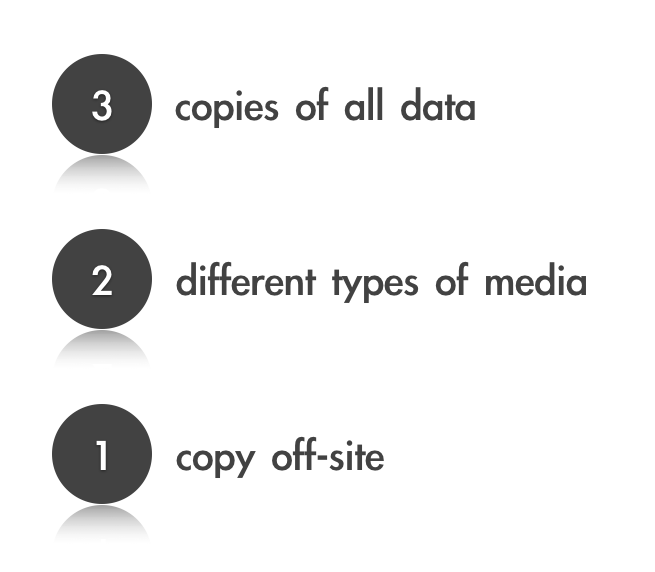

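To start off, we’re going to look at the age-old 3-2-1 backup strategy. I don’t know who invented the concept or when, but it’s been around for a while, and it has generally been regarded as a sensible approach. It goes like this:

- Keep 3 copies of your data (the working copy plus two backups)
- Store them on 2 different types of media or formats
- Keep 1 copy off-site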

Looking at this from a historical perspective, it’s easy to see the theory. If you’re old enough, cast your mind back to the days when drive capacities were measured in megabytes and there were multiple competing physical storage media, such as floppy drives, Zip drives, Jaz drives and MO drives, not to mention DDS and AIT tape formats. Personally, I remember those days with a sense of dread. Hard drives were far more prone to catastrophic failure than they are now. I can remember just one catastrophic drive failure (which was easily recoverable) in the last couple of years, whereas fifteen years ago, there were at least a few every year. Even if the drive itself didn’t fail, the interfaces just weren’t as reliable and could easily corrupt the data when they went wrong. Let’s not talk too much about SCSI right now - I’m about to have lunch…

And then when it did go wrong, you turned to your backup, which was on one of the equally unreliable media mentioned above. The chances of both your working copy and your backup copy being unreadable were extraordinarily high. That’s where the “3” and the “2” come from: a third copy, kept on a different type of media, made it far less likely that everything would fail at once. It makes sense, given the technology available at that time.

The “1” is obvious, and is as relevant today as it was then. An off-site copy of your data is critical to avoiding data loss in the event of, for example, fire and theft.
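To make the three parts of the rule concrete, here is a minimal sketch in Python that checks a list of copies against 3-2-1. The `BackupCopy` class, the `check_321` function and the media labels are all made up for illustration; they don’t come from any real backup tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BackupCopy:
    """One copy of your data (hypothetical model, for illustration only)."""
    name: str      # free-text label, e.g. "working copy"
    medium: str    # type of media, e.g. "internal-disk", "tape", "cloud"
    offsite: bool  # True if the copy is kept away from the main site


def check_321(copies):
    """Report which parts of the 3-2-1 rule a set of copies satisfies."""
    return {
        "three_copies": len(copies) >= 3,
        "two_media": len({c.medium for c in copies}) >= 2,
        "one_offsite": any(c.offsite for c in copies),
    }


copies = [
    BackupCopy("working copy", "internal-disk", offsite=False),
    BackupCopy("nightly backup", "tape", offsite=False),
    BackupCopy("weekly backup", "tape", offsite=True),
]

for rule, ok in check_321(copies).items():
    print(f"{rule}: {'ok' if ok else 'MISSING'}")
```

Note that the check counts the working copy as one of the three, and it says nothing about whether any of the copies can actually be restored, which is the part that tended to go wrong in practice.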

Putting this backup strategy in place at the turn of the millennium was a huge undertaking. Backup software was primitive, unreliable and disruptive. It generally required a high level of IT competence and, more importantly, methodology. In most cases, backup effectiveness depended on the diligence of the operator and, in my personal experience, more often than not it proved ineffective when it mattered most.

These days, data storage is much cheaper and much more reliable, and backup software is more user-friendly and less disruptive. With a few mouse clicks, you can have a basic backup strategy that is fundamentally superior to the most advanced backup systems of 15 years ago. Fundamentally superior? Yes, I really mean it. A backup strategy that requires little user intervention and works in the background without disrupting foreground tasks is better than a complex system that needs constant tweaking and monitoring, however many formats or copies it might produce.

So, is 3-2-1 still relevant? Well, it’s not a bad starting point, but it shouldn’t be regarded as the de facto standard. Today, it is more important to consider the actual risks and weigh them against the cost and effort of the protection on offer. To take a simple example, suppose you store your office documents “in the cloud”, using Google Drive, Dropbox or SharePoint. That’s an off-site copy. Configured correctly, you’ll also have a local copy on your computer. Do you really need a third copy and another format? What sequence of events is going to deny you access to your office documents? Those services are protected so heavily that it would take some global catastrophe to destroy both of your copies. You might feel you can take that risk.

In the next part, we’ll look at what your risks are and some strategies for avoiding data loss in each scenario. Try to stay awake.